BigQuery Pros

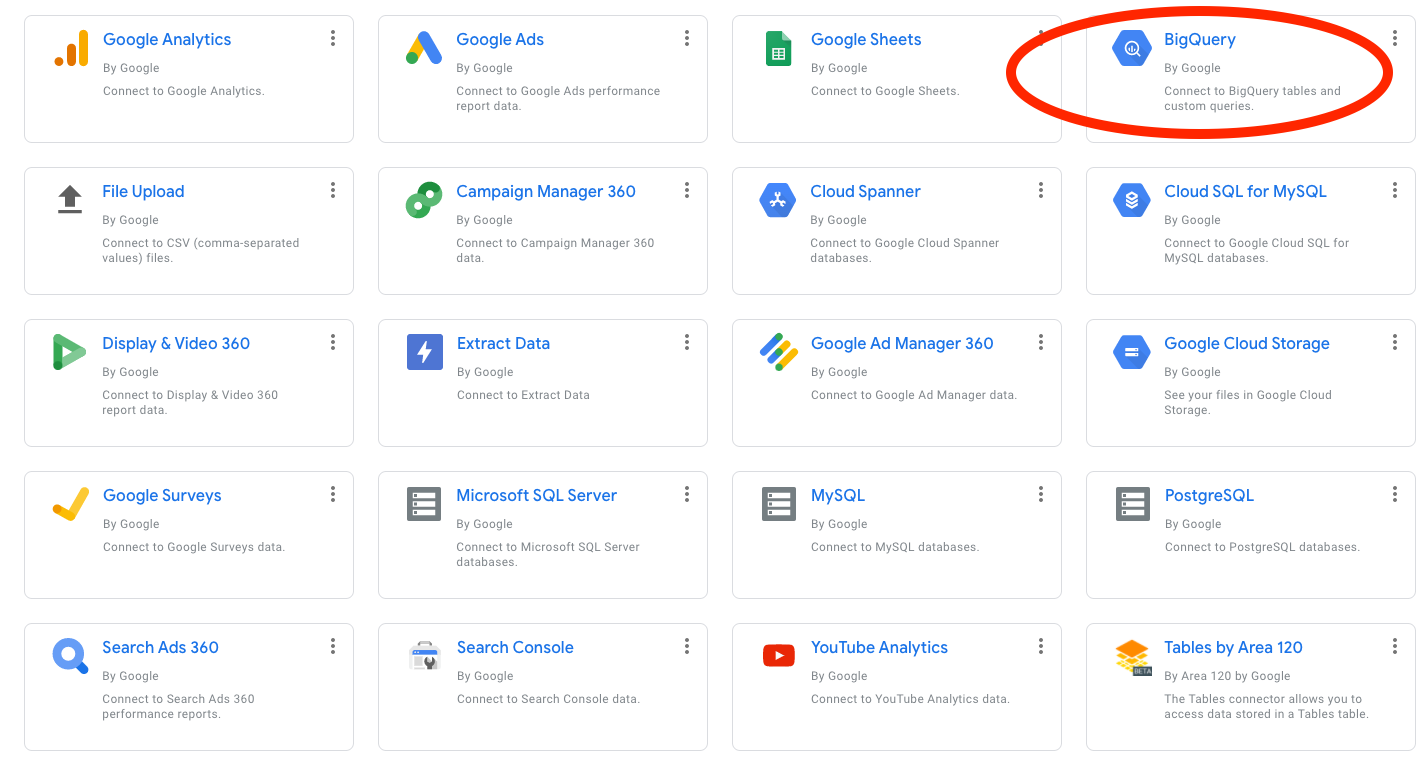

- Serverless, it is easy to handle large size data

- Reducing architecture costs

- Optimal availability

- Easy integration with tools like Data Studio and Google Analytics

Oleksii Vasyliev, Railsware

Brought to you by Alexey Vasiliev, Railsware

resource "google_bigquery_table" "t_user_agent_info" {

dataset_id = google_bigquery_dataset.datasources_redshift.dataset_id

table_id = "t_user_agent_info"

schema = file("datasources_redshift/t_user_agent_info.json")

}resource "google_bigquery_table" "v_users_with_failed_transactions" {

dataset_id = google_bigquery_dataset.datasources_stripe.dataset_id

table_id = "v_users_with_failed_transactions"

description = "Failed transactions"

view {

query = file(

"datasources_stripe/v_users_with_failed_transactions.sql"

)

use_legacy_sql = false

}

}resource "google_bigquery_table" "gs_ga_sources" {

dataset_id = google_bigquery_dataset.datasources_gsheets.dataset_id

table_id = "gs_ga_sources"

external_data_configuration {

autodetect = true

# support Bigtable, Google Sheets, Drive, Google Storage (formats: Avro, Parquet, Orc, CSV, JSON)

source_format = "GOOGLE_SHEETS"

google_sheets_options {

skip_leading_rows = 1

range = "custom_range"

}

source_uris = [

"https://docs.google.com/spreadsheets/d/example/"

]

}

}SELECT c.customer_id, c.name, rq.first_order_date

FROM mydataset.customers AS c

LEFT OUTER JOIN EXTERNAL_QUERY(

'us.connection_id',

'''SELECT customer_id, MIN(order_date) AS first_order_date

FROM orders

GROUP BY customer_id''') AS rq ON rq.customer_id = c.customer_id

GROUP BY c.customer_id, c.name, rq.first_order_date;

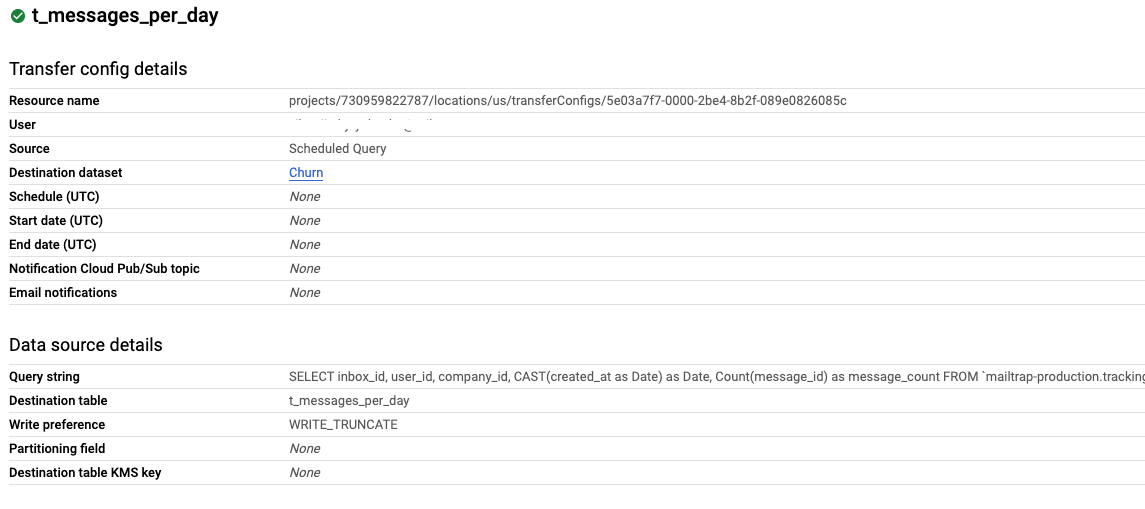

resource "google_bigquery_data_transfer_config" "st_messages_per_day" {

display_name = "st_messages_per_day"

location = "us"

data_source_id = "scheduled_query"

schedule = "every day 01:00"

destination_dataset_id = google_bigquery_dataset.datasources_tracking.dataset_id

params = {

destination_table_name_template = "st_messages_per_day"

write_disposition = "WRITE_APPEND"

query = file("datasources_tracking/st_messages_per_day.sql")

}

}

resource "google_bigquery_routine" "pt_urldecode" {

dataset_id = google_bigquery_dataset.serverless_tracking_pixel.dataset_id

routine_id = "PT_URLDECODE"

routine_type = "SCALAR_FUNCTION"

description = "Function decode encoded url data"

language = "SQL"

arguments {

name = "url"

data_type = jsonencode({

typeKind = "STRING"

})

}

return_type = jsonencode({

typeKind = "STRING"

})

definition_body = trimspace(chomp(file("${path.module}/serverless_tracking_pixel/pt_urldecode.sql")))

}(

SELECT SAFE_CONVERT_BYTES_TO_STRING(

ARRAY_TO_STRING(ARRAY_AGG(

IF(STARTS_WITH(y, '%'), FROM_HEX(SUBSTR(y, 2)), CAST(y AS BYTES)) ORDER BY i

), b''))

FROM UNNEST(REGEXP_EXTRACT_ALL(url, r"%[0-9a-fA-F]{2}|[^%]+")) AS y WITH OFFSET AS i

)

SELECT ...

`serverless_pixel.PT_URLDECODE`(REGEXP_EXTRACT(httpRequest.requestUrl, "ec=([^&]+)")) AS category,

`serverless_pixel.PT_URLDECODE`(REGEXP_EXTRACT(httpRequest.requestUrl, "el=([^&]+)")) AS label,

`serverless_pixel.PT_URLDECODE`(REGEXP_EXTRACT(httpRequest.requestUrl, "eld=([^&]+)")) AS label_data,

resource "google_bigquery_routine" "pt_decrypt" {

dataset_id = google_bigquery_dataset.serverless_tracking_pixel.dataset_id

routine_id = "PT_DESCRYPT"

routine_type = "SCALAR_FUNCTION"

description = "Function decrypt AES-256-GCM values"

language = "JAVASCRIPT"

determinism_level = "DETERMINISTIC"

arguments {

name = "encodedStr"

argument_kind = "FIXED_TYPE"

data_type = jsonencode({

typeKind = "STRING"

})

}

return_type = jsonencode({

typeKind = "STRING"

})

definition_body = trimspace(chomp(templatefile("${path.module}/serverless_tracking_pixel/pt_decrypt.js.tpl", {

secret_key = var.serverless_secret_key

})))

imported_libraries = [

"gs://.../file.js"

]

}if (!encodedStr) {

return '';

}

const [version, encoded, iv, authTag] = encodedStr.split('--');

if (!version || !encoded || !iv || !authTag) {

return '';

}

try {

const decipher = forge.cipher.createDecipher(

'AES-GCM',

forge.util.createBuffer(forge.util.decode64("${secret_key}"))

);

decipher.start({

iv: forge.util.createBuffer(forge.util.decode64(iv)),

additionalData: '',

tagLength: 128,

tag: forge.util.createBuffer(forge.util.decode64(authTag))

});

decipher.update(forge.util.createBuffer(forge.util.decode64(encoded)));

const pass = decipher.finish();

if (pass) {

return decipher.output.data;

} else {

return JSON.stringify({decrypt_error: 'invalid_data'});

}

} catch(e) {

return JSON.stringify({decrypt_error: JSON.stringify(e)});

}variable "serverless_secret_key" {

type = string

sensitive = true

}TF_VAR_serverless_secret_key: ${{ secrets.SERVERLESS_SECRET_KEY }}